Last week I was tasked with enabling code coverage in our Sitecore Visual Studio solution and getting it into CodeCov (via our build pipeline). I ended up going down quite the rabbit hole of different options and hit a lot of brick walls along the way.

I finally got it working, so I thought I’d share my findings and what I did in the end.

TL;DR: add the workflow steps shown at the end of this post to your CI workflow in GitHub Actions and adjust the settings as required.

What is Code Coverage?

In simple terms it gives you an idea of how many of your lines of code are covered by tests, and therefore how confident you can be in making changes and releasing without breaking things. I’m not going to get into whether this is a good idea, how accurate it is as an indication of the quality of your tests, or whether it’s a waste of time, as I was just asked to get it set up and working. I don’t think we’re aiming for 100% code coverage, but we want to know the level of coverage we have and where we need to improve it. By the way, the header image above is a lie (I hacked it together) – 100% sure looks nice though :-).

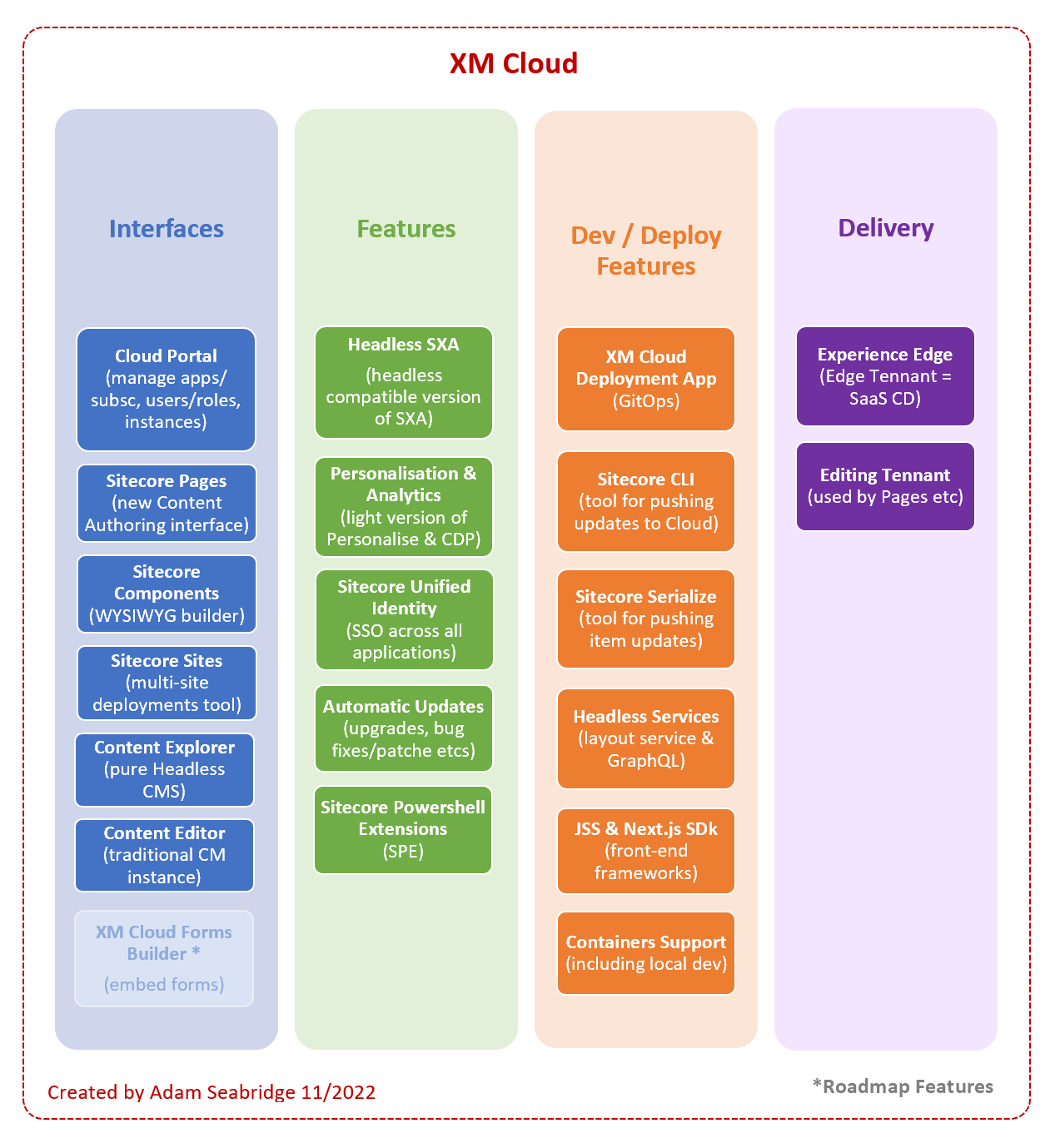

What Code Coverage options are there?

There are quite a few, but some of them are paid for. Given the cost cutting across the board at the moment I felt free ones were best to investigate first. The ones I looked at were as follows:

- AltCover – free and open source

- Coverlet – free, lightweight, open source

- dotCover – paid for (JetBrains)

- NCover – paid for (now in maintenance mode)

- NDepend – paid for

Selected Tools

Read more below on reasoning but in the end I went with the following:

- Coverlet Console

- xUnit (we were already using this to run our tests)

- ReportGenerator

- GitHub Actions

- CodeCov

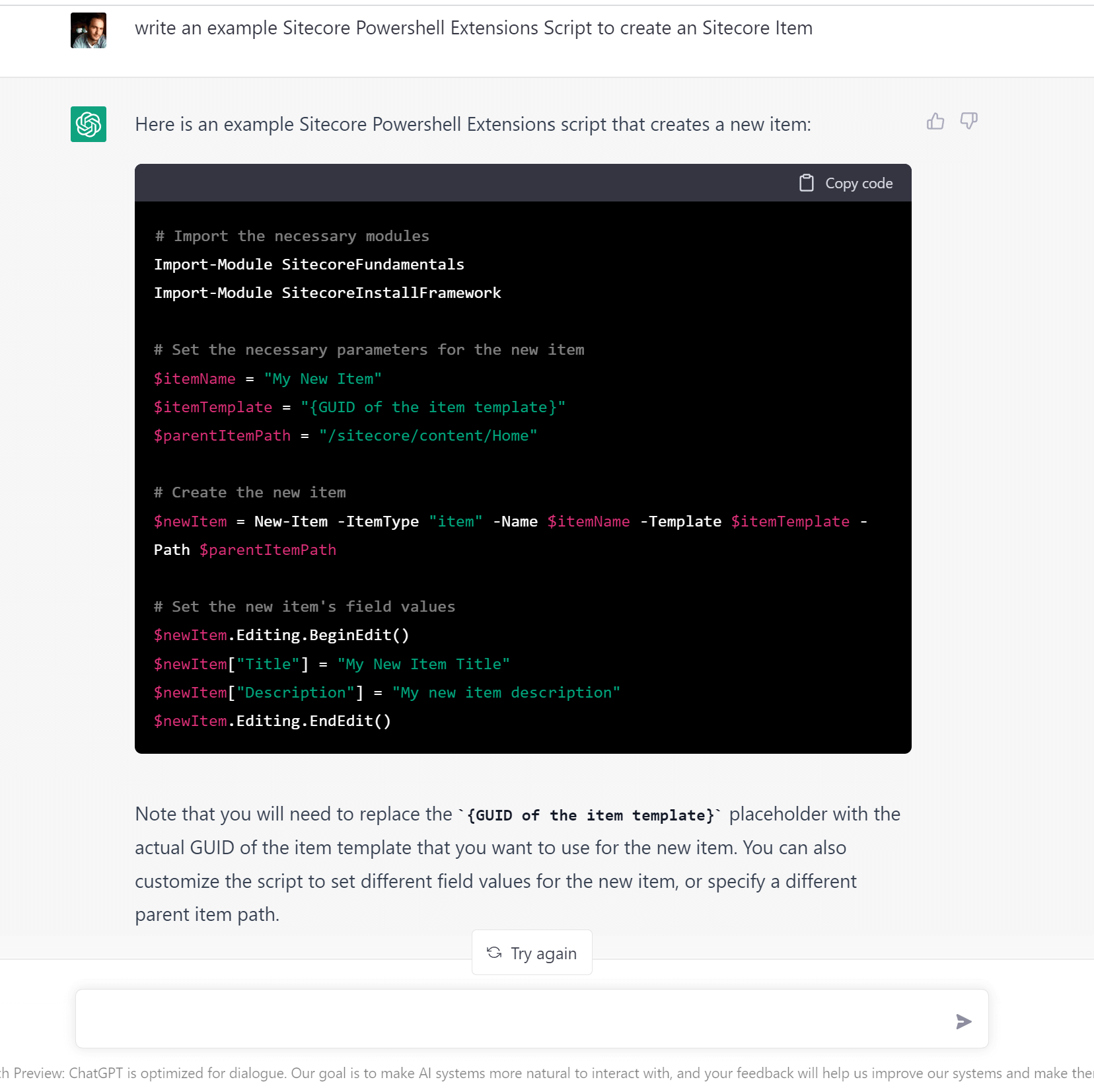

After trying AltCover for a while and struggling to get the filtering working on various dlls, I decided to try Coverlet. Coverlet seems to be the de facto standard and is now included by default in the xUnit test project templates for .NET Core and .NET 5+ in Visual Studio.

As our Sitecore 10.3 project is traditional MVC, we are tied to .NET Framework 4.8. Our projects are also fairly legacy and have been upgraded a few times. Therefore it’s not possible to install Coverlet as a NuGet package within the test projects and drive it from MSBuild, as I’d have liked. It seems this is only possible for newer SDK-style projects or .NET Core ones, and not for classic .NET Framework projects. So I had to go for the Coverlet console instead, which in the end worked pretty well.

How do I use it?

So first you need to install the Coverlet console globally like so:

dotnet tool install --global coverlet.console

Then for each of your test projects you need to execute a command like so:

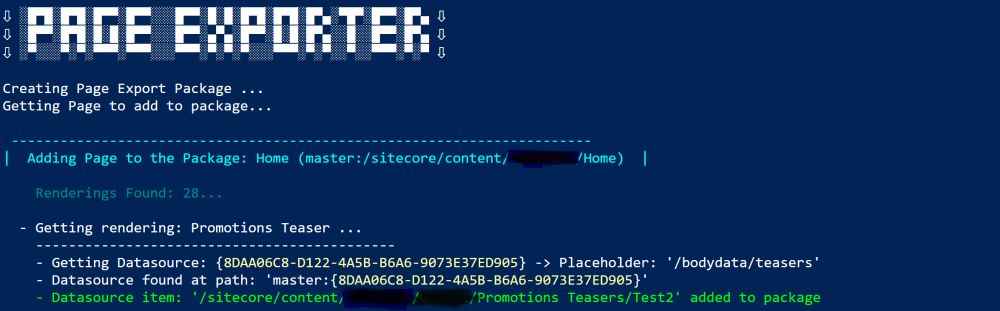

coverlet "C:\Projects\sc103-flux\src\Foundation\Accounts\Tests\bin\FluxDigital.Foundation.Accounts.Tests.dll" --target "C:\Program Files (x86)\Microsoft Visual Studio\2019\Community\Common7\IDE\Extensions\TestPlatform\vstest.console" --targetargs "C:\Projects\sc103-flux\src\Foundation\Accounts\Tests\bin\FluxDigital.Foundation.Accounts.Tests.dll /Parallel /Logger:TRX" --output "C:\Projects\sc103-flux\coverlet\coverlet-report1.cobertura" --format cobertura --include "[FluxDigital.*]*" --verbosity detailed

What this does is pass your test project dll to Coverlet and tell it to run the tests through the VSTest console (which executes our xUnit tests). We also pass some parameters to VSTest so that it runs the tests in parallel and writes the results to a TRX log file. Lastly, we pass some parameters to Coverlet to tell it to filter on certain dlls, as otherwise it seems to try to monitor third-party dlls as well as our code. If you get any errors in the console, it might be because you are not filtering out everything you need to.

So to break it down in more detail:

- coverlet – runs the Coverlet console

- "..\FluxDigital.Foundation.Accounts.Tests.dll" – the test project dll to run code coverage on

- --target "..\vstest.console" – the path to the VSTest console; ensure this path is correct for your version of Visual Studio

- --targetargs "..\FluxDigital.Foundation.Accounts.Tests.dll /Parallel /Logger:TRX" – the arguments passed on to VSTest: the path to the dll you are testing again, plus the two switches below

- /Parallel – runs the tests in VSTest in parallel

- /Logger:TRX – writes the VSTest results to a TRX log file

- --output "..\coverlet-report1.cobertura" – the report file saved at the end of the test run

- --format cobertura – the format for the above report file (this format allows us to merge the files from different test runs)

- --include "[FluxDigital.*]*" – this parameter lets you filter which assemblies (dlls) and/or types to include by name. In my case I only want to include the code coverage of dlls that start with "FluxDigital.", so this filters to just those. You can add multiple include parameters if you wish.

- --exclude "[*]*Model" --exclude "[FluxDigital.Foundation.Models]*" --exclude "[*]*Controller*" – I’m not actually using these filters in my command above, but you can add multiple exclude parameters, e.g. to exclude any Models or Controllers from Coverlet

- --verbosity detailed – this tells Coverlet to output a lot of detail when running the code coverage; it’s really useful for debugging any issues

I found some info on include/exclude filtering and it was really helpful. Essentially, patterns in brackets such as [my.dll.name] match assemblies, and patterns outside the brackets such as *my.class.name match types (classes).

Once it runs you will get a code coverage report, which you will note is in the Cobertura format. The reason for this is that we want to merge the results of all our test runs into one code coverage file, and other formats don’t work for this. More on this later.
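To make the filter syntax a little more concrete, here is a minimal PowerShell sketch that builds the repeated --include / --exclude arguments from two lists. The function name Get-CoverletFilterArgs is made up for illustration, and the exclude patterns are the examples from the list above rather than filters I actually run with.

```powershell
# Hypothetical helper (not part of Coverlet): turns lists of filter
# expressions into repeated --include / --exclude arguments.
function Get-CoverletFilterArgs {
    param(
        [string[]]$Include = @(),
        [string[]]$Exclude = @()
    )
    $result = @()
    foreach ($pattern in $Include) { $result += @('--include', $pattern) }
    foreach ($pattern in $Exclude) { $result += @('--exclude', $pattern) }
    return $result
}

# [assembly filter]type filter - the part in brackets matches the dll name
$include = @('[FluxDigital.*]*')
$exclude = @('[*]*Model', '[FluxDigital.Foundation.Models]*', '[*]*Controller*')

$filterArgs = Get-CoverletFilterArgs -Include $include -Exclude $exclude

# Print the arguments that would be appended to the coverlet command line
$filterArgs -join ' '
```

The resulting array can then be appended to the rest of the coverlet arguments, so the filters live in one place instead of being repeated in every command.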

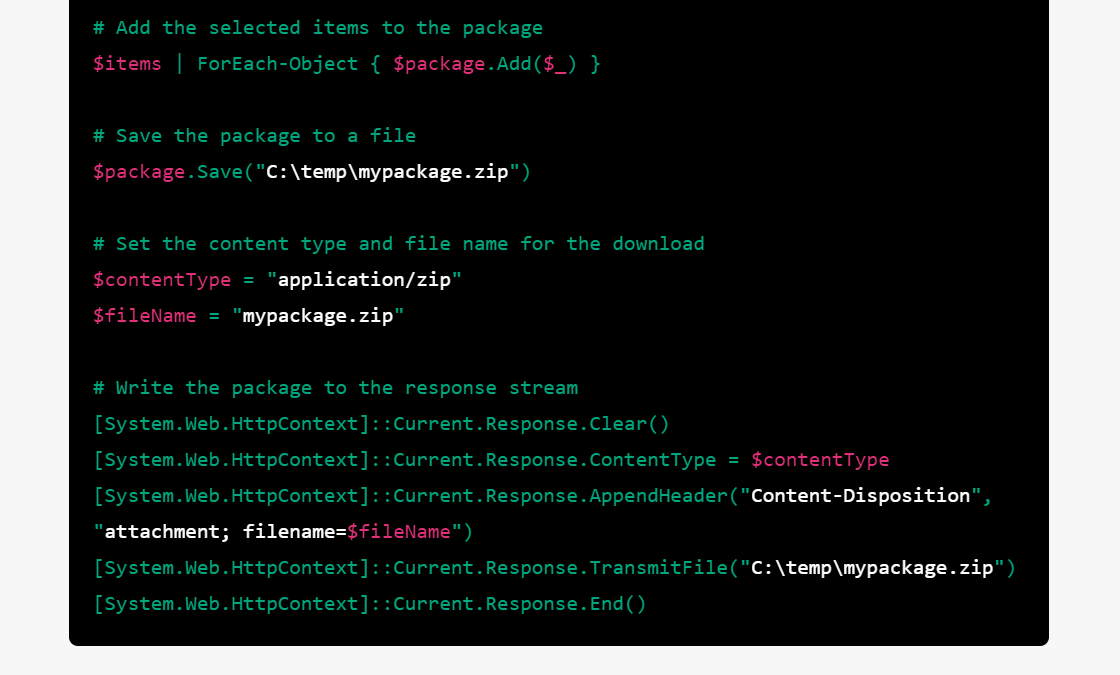

You need to run a similar command (change the test dll and report name) for each test library and save the code coverage file out with a different name but in the same folder. In my case this was 9 test projects and therefore 9 code coverage files generated. Like so:

Running this 9 times in our build pipeline isn’t going to cut it, so you will see I solved this later using PowerShell to find all test dlls and run these commands automatically – but I wanted to explain how this works more simply first.

Report Generator

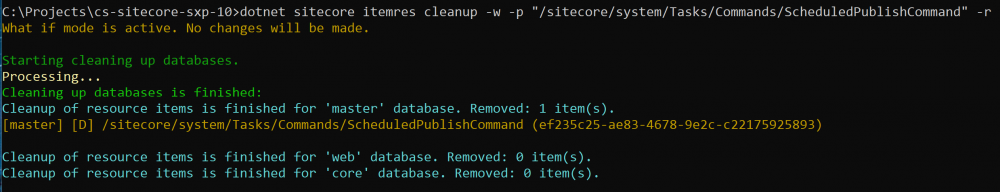

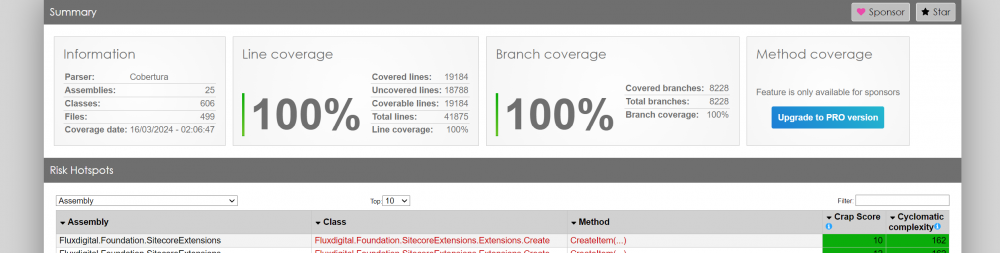

To merge them I used ReportGenerator. Its merged output is also what we will upload to CodeCov later in the pipeline. First we need to install it like so:

dotnet tool install -g dotnet-reportgenerator-globaltool

Then with the following command we can merge the files (ensure the path is correct to find your individual cobertura report files):

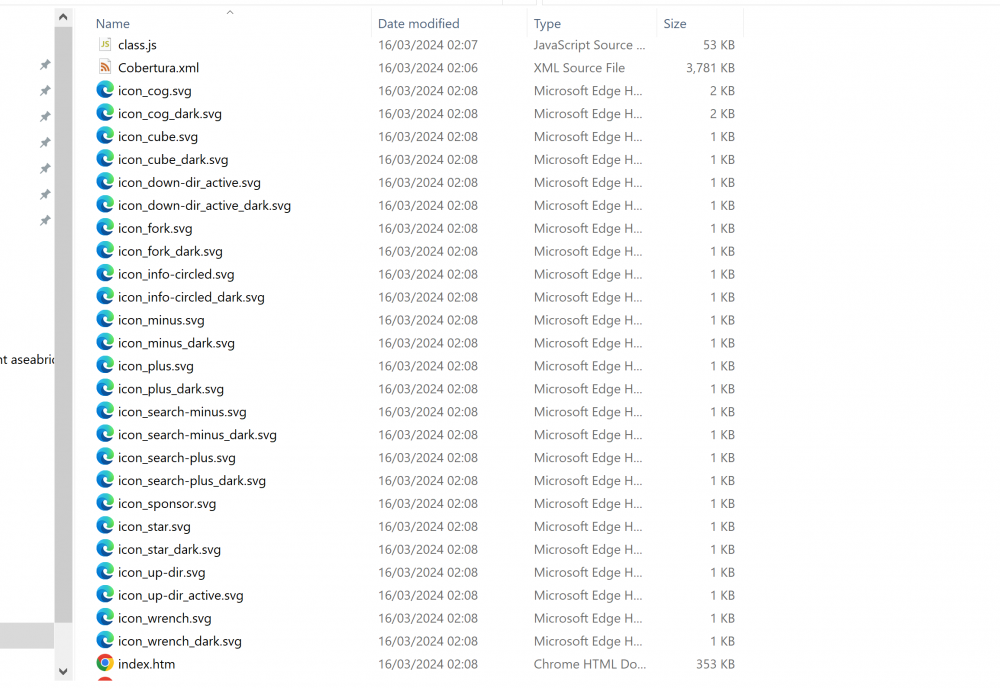

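reportgenerator "-reports:C:\Projects\sc103-flux\coverlet\*.cobertura" "-targetdir:C:\Projects\sc103-flux\coverlet\report" "-reporttypes:Cobertura;Html"

This gives us a Cobertura XML file with all the code coverage data blended into one, plus an HTML report generated from it. (Both report types need to be listed, separated by a semicolon; with only Cobertura specified, just the merged XML file is written.)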

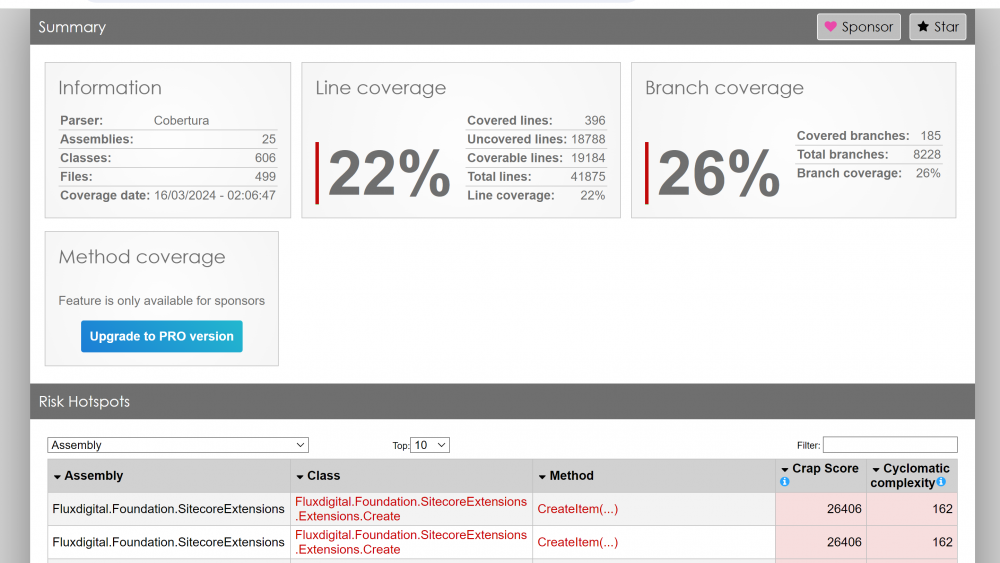

If you open up the index.html file in your browser you will see a summary of your Code Coverage at the top and then a breakdown by Assembly below that. Hmm 22%, not great at all. We have some work to do here to improve this, but that’s a job for another day.

This report is pretty neat though and is already enough for you to see where the gaps are in your coverage so you can decide where you need to add more tests.
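If you would rather find the gaps from a script than from the HTML page, the merged Cobertura file is plain XML and can be queried directly. The sketch below is only an illustration: the embedded XML is made-up sample data in the Cobertura layout (the assembly names and rates are invented), and the 50% threshold is an arbitrary assumption.

```powershell
# Made-up sample data in the Cobertura layout. In practice you would load the
# merged file instead (path assumed from the reportgenerator command above):
# [xml]$report = Get-Content 'C:\Projects\sc103-flux\coverlet\report\Cobertura.xml'
[xml]$report = @'
<coverage line-rate="0.22">
  <packages>
    <package name="FluxDigital.Foundation.Accounts" line-rate="0.61" />
    <package name="FluxDigital.Feature.Example" line-rate="0.08" />
  </packages>
</coverage>
'@

$threshold = 0.5  # hypothetical minimum line coverage per assembly

# Collect every assembly (Cobertura "package") below the threshold
$lowCoverage = foreach ($package in $report.SelectNodes('/coverage/packages/package')) {
    $rate = [double]$package.GetAttribute('line-rate')
    if ($rate -lt $threshold) {
        [pscustomobject]@{
            Assembly    = $package.GetAttribute('name')
            LinePercent = [math]::Round($rate * 100, 1)
        }
    }
}

$lowCoverage | Format-Table -AutoSize
```

GetAttribute is used rather than property access because an attribute called name can be confused with the XML element’s own Name property.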

Putting everything into GitHub Actions

The next step is to run this in the build pipeline (in our case GitHub Actions), use ReportGenerator to merge the results and send the merged file to CodeCov.

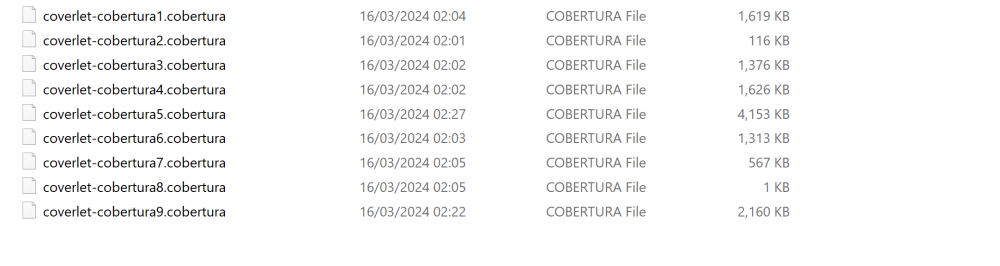

Running Coverlet via PowerShell for all Test Projects

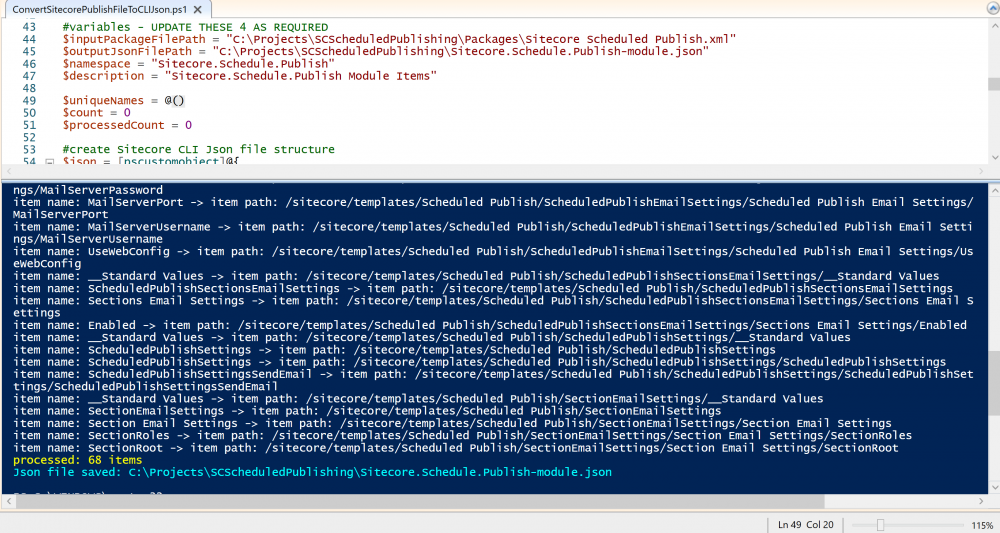

As mentioned earlier, in order to make this simpler to run in the build pipeline and more maintainable, I decided to write a PowerShell script which finds all test dlls that match a specific pattern (it ensures a unique list) and then executes the coverlet command (from above) for each dll in turn with the VSTest console.

This is what I came up with:

$basePath = "."
$reportPath = "coverlet"
$incNamePattern = "*Fluxdigital*test*.dll"
$incVSTestNamePattern = "[Fluxdigital.*]*"

#get all test dlls in the solution - filter here to reduce duplicates
$testdlls = (Get-ChildItem $basePath -Include $incNamePattern -Recurse | Where-Object { $_.FullName -match 'Release' -and $_.FullName -notmatch 'obj' -and $_.FullName -notmatch 'LocalPublish' }).FullName
#write-host "$($testdlls.Count) test dlls found..."

#ensure we only get each test dll once by tracking the file names already added
[System.Collections.ArrayList]$uniquedlls = @()
[System.Collections.ArrayList]$seenNames = @()
foreach ($testdll in $testdlls) {
    $fileName = [System.IO.Path]::GetFileName($testdll)
    #compare exact file names (a regex -match against the full paths can give false positives)
    if ($seenNames -notcontains $fileName) {
        $seenNames.Add($fileName) | Out-Null
        $uniquedlls.Add($testdll) | Out-Null
    }
}

#run coverlet for each test dll in the list
Write-Host "$($uniquedlls.Count) unique test dlls found..."
foreach ($uniquedll in $uniquedlls) {
    $fileName = [System.IO.Path]::GetFileName($uniquedll)
    $reportFile = "$($reportPath)\coverlet-$($fileName.Replace('.dll','')).cobertura"
    #the here-string body and its terminator must start at the beginning of the line
    $cmd = @"
coverlet "$($uniquedll)" --target "vstest.console.exe" --targetargs "$($uniquedll)" --output "$($reportFile)" --format cobertura --include "$($incVSTestNamePattern)" --verbosity detailed
"@
    Write-Host "running tests for: $($fileName) - report path: $($reportFile)"
    $cmd | cmd
}

This is used in the GitHub Action below, so you will need to update $incNamePattern and $incVSTestNamePattern to match your test dlls when using it in your GitHub workflow. You could also just run it locally to generate a report.
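As an aside, the "only keep each test dll once" step can also be expressed with Group-Object. This is just a sketch of an alternative, not what runs in our pipeline, and the paths are made-up examples of the same test dll turning up in more than one bin folder.

```powershell
# Made-up example paths: the Accounts test dll appears in two bin folders
$testDlls = @(
    'src\Foundation\Accounts\Tests\bin\Release\FluxDigital.Foundation.Accounts.Tests.dll',
    'src\Feature\Example\Tests\bin\Release\FluxDigital.Foundation.Accounts.Tests.dll',
    'src\Feature\Example\Tests\bin\Release\FluxDigital.Feature.Example.Tests.dll'
)

# Group by file name only (last path segment) and keep one path per group
$uniqueDlls = $testDlls |
    Group-Object { ($_ -split '[\\/]')[-1] } |
    ForEach-Object { $_.Group[0] }

Write-Host "$(@($uniqueDlls).Count) unique test dlls found..."
$uniqueDlls
```

Grouping on the file name compares whole names rather than pattern-matching against full paths, so two test dlls whose names merely overlap are not mistaken for duplicates.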

The Final GitHub Actions YAML

In order to use Coverlet, VSTest and ReportGenerator in GitHub Actions I needed to add some steps to the build pipeline to install the tools. I also wanted to show the code coverage in the GitHub Actions summary, so I eventually found a Marketplace action that would do that (and work with Windows runners), and finally an action to send the report to CodeCov. Note you will need to update this action with your repo details and CodeCov token (stored in secrets).

Please review all the settings below too before trying this in your CI pipeline:

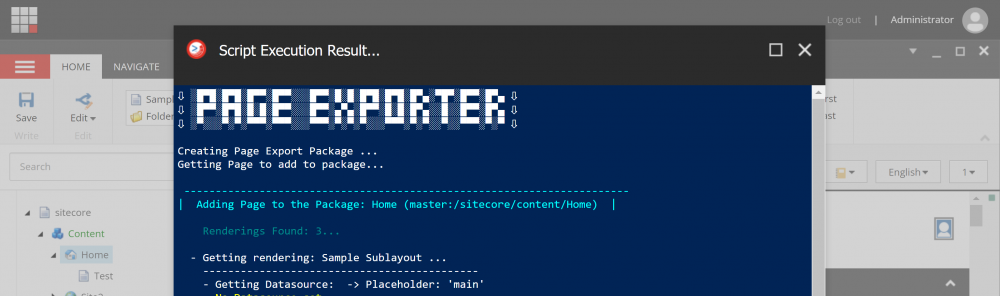

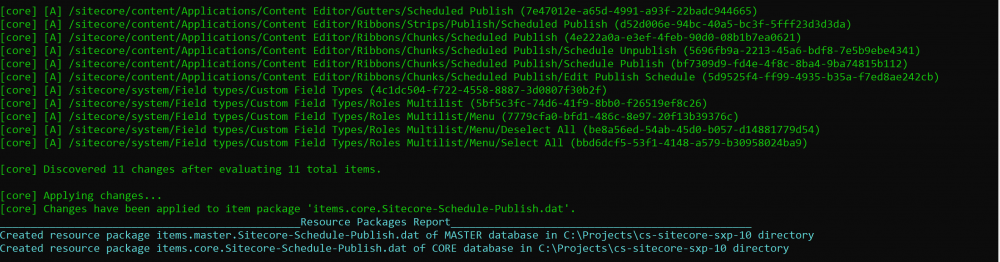

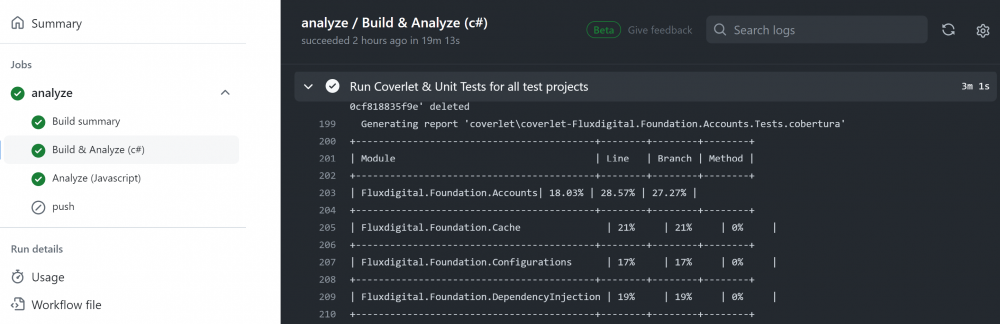

Just like running Coverlet locally from the command line, you get a summary as it runs in GitHub too, so it’s easy to debug any issues:

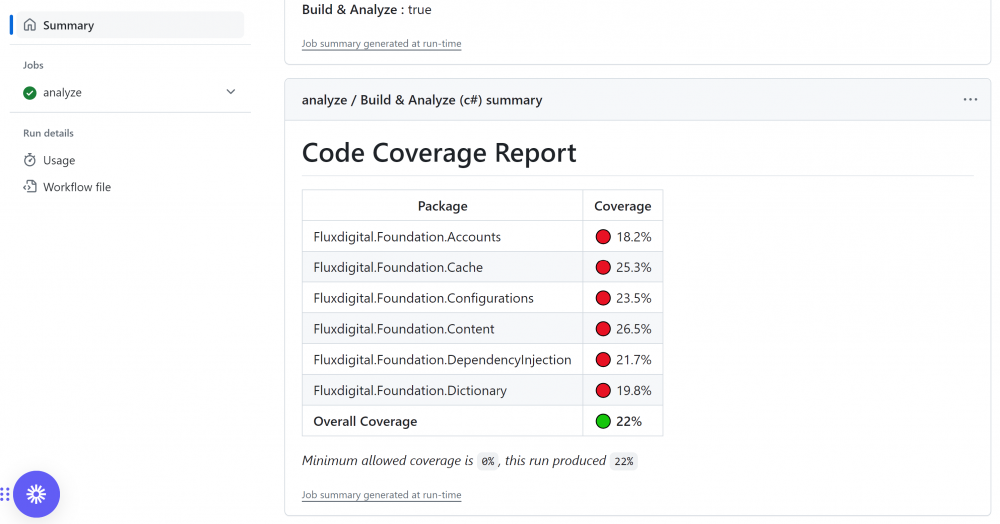

The report summary looks like so, pretty cool I think. You can configure this to work for PRs too if you wish.

Once you have this all working you may want to reduce the log level so it’s not as noisy in the console, for example by changing --verbosity detailed to --verbosity normal.

Incidentally, AltCover seems very clever, and if you can get it to work correctly for your solution it might be better than Coverlet, so give it a try too if you have time.

Hopefully this is useful for others who need to get code coverage set up for legacy Sitecore MVC projects (or other older .NET Framework projects). I’m sure a very similar approach would work in Azure DevOps or other CI/CD platforms too. I’m off to write some more unit tests.

As always there were a lot of useful links out there that helped me with this in addition to the ones I’ve included above:

https://blog.ndepend.com/guide-code-coverage-tools/

https://medium.com/@justingoldberg_2282/setting-up-code-coverage-with-net-xunit-and-teamcity-for-a-solution-with-multiple-test-projects-5d0986db788b